Quesma Releases OTelBench: Independent Benchmark Reveals Frontier LLMs Struggle with Real-World SRE Tasks

New benchmark shows top LLMs achieve only 29% pass rate on OpenTelemetry instrumentation, exposing the gap between coding ability and real-world SRE work.

OTelBench shows that while LLMs are impressive at generating code snippets, they're not yet capable of the cross-cutting reasoning required for production engineering.”

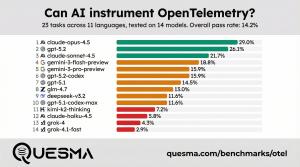

WARSAW, POLAND, January 20, 2026 /EINPresswire.com/ -- Quesma, Inc. announced the release of OTelBench, the first comprehensive benchmark for evaluating LLMs on OpenTelemetry instrumentation tasks. The open-source dataset tests 14 state-of-the-art models across 23 real-world tasks in 11 programming languages, revealing significant gaps in AI's ability to handle production-grade Site Reliability Engineering (SRE) work.— Jacek Migdał, founder of Quesma

While frontier LLMs have demonstrated impressive coding capabilities, the benchmark reveals a stark reality: the best-performing model, Claude Opus 4.5, achieved only a 29% pass rate on OpenTelemetry instrumentation tasks, compared with its 80.9% pass rate on SWE-Bench. This gap highlights a critical distinction between writing code and performing the complex, cross-cutting engineering work required for production systems.

The $1.4 Million Per Hour Problem

Enterprise outages cost an average of $1.4 million per hour, making production visibility mission-critical. Distributed tracing, the gold standard for debugging complex microservices, allows teams to link user actions to every underlying service call. However, implementing this visibility remains difficult, with 39% of organizations citing complexity as their top observability obstacle. OpenTelemetry has emerged as the industry standard with backing from 1,100+ organizations, yet configuring it correctly remains a major source of toil for SRE teams.

Fundamental Limitations Exposed

The benchmark tested models on agentic coding tasks where they were given source code from realistic applications, an interactive Linux terminal, and clear instrumentation objectives. The results revealed several critical failure modes:

Context propagation, passing trace context between services to maintain parent-child span relationships, proved to be an insurmountable barrier for most models. This is particularly concerning because context propagation is fundamental to distributed tracing.
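To make the mechanism concrete, here is a minimal sketch of context propagation using only the Python standard library. It is not taken from OTelBench or from the OpenTelemetry SDK: the `Span`, `start_span`, `inject`, and `extract` names are hypothetical and chosen for illustration. The only real-world detail assumed is the W3C Trace Context `traceparent` header layout (`version-traceid-parentid-flags`), which OpenTelemetry propagators use by default.

```python
import re
import secrets
from dataclasses import dataclass
from typing import Dict, Optional

# W3C Trace Context layout: 2-hex version, 32-hex trace id, 16-hex parent id, 2-hex flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass(frozen=True)
class Span:
    """Hypothetical, stripped-down span: just the identifiers needed for linking."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]


def start_span(name: str, parent: Optional[Span] = None) -> Span:
    """Start a span; reuse the parent's trace id if there is one, else begin a new trace."""
    trace_id = parent.trace_id if parent else secrets.token_hex(16)
    parent_id = parent.span_id if parent else None
    return Span(name, trace_id, secrets.token_hex(8), parent_id)


def inject(span: Span, headers: Dict[str, str]) -> None:
    """Caller side: write the current span's identity into outgoing request headers."""
    headers["traceparent"] = f"00-{span.trace_id}-{span.span_id}-01"


def extract(headers: Dict[str, str]) -> Optional[Span]:
    """Callee side: rebuild the remote parent from incoming headers, if present and valid."""
    match = TRACEPARENT_RE.match(headers.get("traceparent", ""))
    if match is None:
        return None
    trace_id, span_id, _flags = match.groups()
    return Span("remote-parent", trace_id, span_id, None)


if __name__ == "__main__":
    # Service A starts a trace and calls service B over HTTP.
    client_span = start_span("checkout")
    outgoing_headers: Dict[str, str] = {}
    inject(client_span, outgoing_headers)

    # Service B continues the same trace as a child of A's span.
    server_span = start_span("charge-card", parent=extract(outgoing_headers))
    print(server_span.trace_id == client_span.trace_id)

    # If the header is never forwarded, B silently starts an unrelated trace.
    orphan_span = start_span("charge-card", parent=extract({}))
    print(orphan_span.parent_id)
```

The last case shows why a missed propagation step is easy to overlook: nothing fails at runtime, but the callee's span lands in a separate trace with no parent, so the end-to-end request can no longer be followed.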

"The backbone of the software industry consists of complex, high-scale production systems with mission-critical reliability, and seasoned engineers are architecting, evolving, and troubleshooting them," said Jacek Migdał, founder of Quesma. "OTelBench shows that while LLMs are impressive at generating code snippets, they're not yet capable of the cross-cutting reasoning and sustained problem-solving required for production engineering. This gap matters because many vendors are marketing AI SRE solutions with bold claims but no independent verification. We need benchmarks like this to separate reality from hype."

Language Ecosystems Matter

Success rates varied dramatically across programming languages, revealing that AI generalization is far weaker than that of human engineers. Models had moderate success with Go and, quite surprisingly, C++. A few tasks were completed for JavaScript, PHP, .NET, and Python. Just a single model solved a single task in Rust. None of the models solved a single task in Swift, Ruby, or, to our biggest surprise, Java (due to a build issue).

Why This Matters for AI Development

OTelBench reveals several reasons why OpenTelemetry instrumentation challenges current LLMs:

- Reliability-critical applications reside in private repositories at companies like Apple, Airbnb, and Netflix, limiting training data.

- Instrumentation requires cross-cutting changes across codebases, rather than sequential additions.

- Some tasks required 50+ commands over 10+ minutes. Models consistently performed worse as tasks lengthened.

Migdał added, "AI SRE in 2026 is what DevOps Anomaly Detection was in 2016—lots of marketing, huge budgets, but lacking independent benchmarks. Just as SWE-Bench became the standard for coding evaluation, we need SRE-style benchmarks to determine what actually works. That's why we're releasing OTelBench as open-source: to create a North Star for navigating the AI hype and to enable the community to track real progress."

A Path Forward

Despite the challenges, the benchmark reveals promising signals. Claude Opus 4.5, GPT-5.2, and Gemini 3 models show capability on specific tasks, with the go-otel-microservices-traces task reaching a 52% pass rate. With more environments for Reinforcement Learning with Verifiable Rewards, OpenTelemetry instrumentation appears to be a solvable problem for future AI systems.

Until then, organizations requiring distributed tracing across services should expect to write that code themselves, or work alongside AI assistants that understand their limitations.

OTelBench is available today as an open-source project at https://quesma.com/benchmarks/otel/, enabling researchers and practitioners to reproduce results and contribute additional test cases.

Lucie Šimečková

Quesma

press@quesma.com
